High-End Audio

Science or Snake-Oil?

In 2006 I was invited to a local high-end audio store’s annual show where I had a chance to listen to an unusual demo. A salesman for expensive turntables, along with an editor of Stereophile fame, tried to convince the audience of the inferiority of CDs compared to vinyl records. The equipment was top of the line: Wilson Audio speakers ($135k/pair), VTL tubed monoblock power amplifiers with the associated pre-amplifier ($60k, if memory serves), and speaker wires that could make your average garden hose jealous (I’ll come back to those esoteric speaker wires below).

In the first part of the demo, the turntable salesman played a record he had bought the night before, in a second hand record store in the University district. Before lowering the needle into the grooves he expressed his joy and pride about his find ($5, no k here). It sounded like a used record: Dusty. Would it hurt to clean the record before playing it on a stereo that cost more than my three bedroom house in 1999? I tried to listen to Charlie “Bird” Parker, ignoring the pops and clicks of the vinyl, and there was a saxophone in a club. Not a particularly enjoyable listening experience, but it sounded like a saxophone nonetheless.

In the second part of the demo the salesman played a CD he had made from the above record, also the night before. Something like connecting the line-out of the phono stage to the line-in of the laptop, have the laptop quantize the vinyl, clicks and pops and all, such as not to make the comparison too obvious, and then play it back through similar means. As far as I remember, it was level matched, and the track sounded just like the record. It was, after all, a faithful, digital copy of the record. Once the saxophone began to play, the difference became painfully obvious. The crude analog-digital-analog conversion process easily managed to ruin my listening experience even more. Behind all the pops and clicks the saxophone sounded synthetic. Somehow the audience was left with the worst of both worlds.

Two things are blatantly wrong with this demo:

1. It is not a double-blind AB test.

2. It compares apples with oranges.

While error 1 is obvious and I tried hard to not get pre-conditioned, error 2 is a little bit more difficult to illustrate, particularly since the salesman made a seemingly laudable effort to change one and only one parameter: digital vs. analog playback. Had the CD been made from the analog master tape, without all the clicks and pops of the dirty record, the CD would have been way too easy to identify. The perfidious part of the error was to use a rather crude analog-digital-analog conversion in the process. If state-of-the-art digital recording and playback equipment had been used, I bet the CD copy of the record would have been virtually indistinguishable from the vinyl record itself, with authentic clicks and pops and all (I’ll come back to the analog-digital-analog round trip below). After the demo, and after most of the audience had left the room, I pointed this out to the salesman, and he used many words to tell me that he didn’t disagree (which is sales-talk for “I agree but you should shut up now”).

I’d like to emphasize that the above should not be misconstrued to mean that I find anything wrong with Wilson speakers or VTL amplifiers. I had a chance to talk to a soft-spoken David Wilson about several of his speakers. He takes an almost holistic approach—holistic in Aristotle’s sense that the whole is more than the sum of its parts. It’s not merely “the magnets,” as a baggage handler at SLC airport strongly suspected, it all has to work together like a symphony orchestra. I have no reason to assume he is not sincere about what he is doing.

Compared to other components of the audio chain, speakers are most likely subject to some kind of compromise, which I plan to illustrate elsewhere on this web-site. From a customer’s point of view, these compromises may include size and notably price. I simply don’t have $135k to spare for a pair of speakers, however well-engineered they may be and however good they may sound. And if I had that kind of money, the engineer in me would probably want to know a few more things about what he’s getting for it. Alas, tough for the enthusiast DIYer, many of these things are well-kept trade secrets...

The all-tube amplifiers open a different can of worms. Tubes (or valves) are the amplifying devices that preceded semiconductors. By the time transistors were invented and made their way into audio components, designing amplifiers with tubes was reasonably well understood. By contrast, early transistor amplifiers were prone to failure[1], and if they worked, they “sounded like transistor amplifiers.” Accordingly, any serious audio enthusiast would stick to tubes.

What went wrong? (Bear with me here; I’m not an expert in amplifier circuitry, neither with tubes nor with transistors, so I’ll keep it simple.) Tube amplifiers produce mainly even harmonics when they distort, and the onset of distortion is gradual when the amplifier is driven beyond its limits. While it is a kind of distortion (something added to or removed from the original signal, thereby compromising fidelity), it is typically perceived as much less objectionable than odd harmonics. Modern guitar amplifiers continue to use tubes for this particular effect. By contrast, transistor amplifiers produce odd harmonics, and the onset of distortion is abrupt when they are driven beyond their limits. For a transistor amplifier of similar nominal power to a tube amplifier, this put the early transistor amplifiers at a clearly audible disadvantage.

Moreover, when compared to tubes, transistors are in some sense much faster. While this could be an advantage, early circuit designers apparently weren’t aware of the consequences of this speed. In turn, this led to failures or, even before failure, to a kind of distortion that would eventually be known as transient intermodulation distortion (TIM). While it was relatively easy to construct transistor amplifiers with much higher power than equivalent tube amplifiers, and while it was eventually possible to safeguard transistors from “blowing up,” the phenomenon of TIM somehow escaped the capabilities of early measurement equipment.

The inexplicable consequence at the time was that some people could hear a difference between two kinds of amplifiers that measured identically. Eventually the puzzle was solved[2][3][4], but by that time some permanent damage must have been done already: Tubes were perceived to sound better than transistors. This perception persists today, even though today one could reasonably argue that if one amplifier sounds different than another one, then one of the two (or possibly both) must be altering the input signal in a perceptible way, which would be an insufficient implementation of the respective concept.

Much like in the above demo, the error was the result of comparing apples and oranges. A reasonably matured implementation of the tube concept was compared with an immature implementation of the transistor concept. In turn, this may have helped to open the door for cultivating inordinate amounts of subjectivism[5]. If objective science failed then, let’s try to hear other differences that can’t be measured, or even better, let’s make customers believe that there are audible differences when there are none.

This gets me to the speaker wires of the above demo: These unwieldy hoses of copper strands and transparent insulation looked impressive, alright. I was curious about the tin can pieces somewhere between the amplifiers and the speakers. They reminded me in size and shape of a muffler. Naturally, it was impossible to find out just what they would be good for—the technobabble was simply too opaque. But they made a “huge difference” according to the salesperson of the audio store. How much do they cost? Something about “soundstage” and “transparency.” Yes, but how much? Well, compared to what they do, they are a real bargain. How much?

$80k. Eighty thousand dollars for a pair of speaker wires!? I double-checked, just to make sure. Numbers can be surprisingly difficult in a foreign language, particularly around high-end voodoo. It was indeed eight-oh thousand, not “merely” eight-een thousand. This is bunk, plain and simple. If your speaker wire has sufficient diameter for the ampères drawn by your speaker, it will do just fine. I paid 89¢/ft for “Home Depot’s best” (12 AWG[6] or about 3.3 mm² in cross-section) and this is likely much more copper than I need.
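To put a rough number on “sufficient diameter,” here is a minimal sketch that estimates the resistance of such a cable and the resulting level drop at the speaker. The copper resistivity is a textbook value; the 5 m run and the 8 Ohm nominal load are assumptions for illustration only, and the cable is treated as a pure resistance.

```python
import math

COPPER_RESISTIVITY = 1.68e-8  # ohm·metre at room temperature (textbook value)

def cable_resistance(run_length_m, cross_section_mm2):
    """Resistance in ohms of a two-conductor cable (current flows out and back)."""
    area_m2 = cross_section_mm2 * 1e-6
    return COPPER_RESISTIVITY * (2 * run_length_m) / area_m2

def level_drop_db(cable_ohms, speaker_ohms):
    """Level lost at the speaker because the cable sits in series with it."""
    return 20 * math.log10((speaker_ohms + cable_ohms) / speaker_ohms)

# Assumed example: 5 m of 12 AWG (about 3.3 mm²) feeding a nominal 8 ohm speaker.
r_cable = cable_resistance(5.0, 3.3)
drop = level_drop_db(r_cable, 8.0)
print(f"cable: {r_cable:.3f} ohm, level drop: {drop:.2f} dB")
```

Under these assumptions the cable adds roughly 0.05 Ohm in series with the speaker and costs well under a tenth of a decibel.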

What is worse, marketing can make people believe things to the point where they hear things as soon as they see the allegedly inferior thing or speaker wire[7]. I have personally heard about “directional” speaker wires sold by another local audio store, the wires having little arrows on the insulation indicating which way the current must flow to get to the speakers. Hello? If the wire was conducting electrons in one direction only, it would be a semi-conductor, and I sure would not want a semi-conductor as a speaker wire (for the myth about equal lengths of speaker wire, see Speaker Wires seen as a Low-Pass Filter).

I also read about special green marker pens with which you’ll have to paint the edge of your CDs. This supposedly prevents stray reflections from the red laser because complementary colors somehow mutually annihilate, but only if it is that special green dye. Say what? If we had to go to such lengths to “prevent” the laser from erratic readings, then that particular laser would be hopelessly out of aim and out of focus to the point where it wouldn't read anything on the CD in the first place[8].

Now, all of the above is not to be misconstrued that all high-end audio is bunk. Au contraire. The ability of an audio chain to reproduce a recording with the highest fidelity is an experience I try to find time to enjoy on a daily basis. Except, of course, for the speakers, which I chose to build myself. This choice is in part to counter-balance the much more abstract engineering involved in computing sciences[9], in part because my first degree is in experimental physics and I enjoy experimenting and finding things out, and in part because all speakers have to compromise if only for reasons of physics. Building the speakers myself lets me make my own choices of compromises—at least to the degree that I understand the theory behind speakers.

The problem remains, though, to separate the wheat from the chaff: What’s science, and what’s snake-oil? Even for a fairly technically minded consumer, the answer can be very difficult, if not impossible. Take, for instance, my $79 Sony DVD player. Apart from DVDs, it can play CDs as well, but so can a $34k three-box dCS Verdi/Purcell/Elgar stack. To wit, both play the same red book CD in PCM format[10] sampled at 44.1 kHz and 16 bits per sample. How to explain the price difference? 16 bits are 16 bits in either player, right?

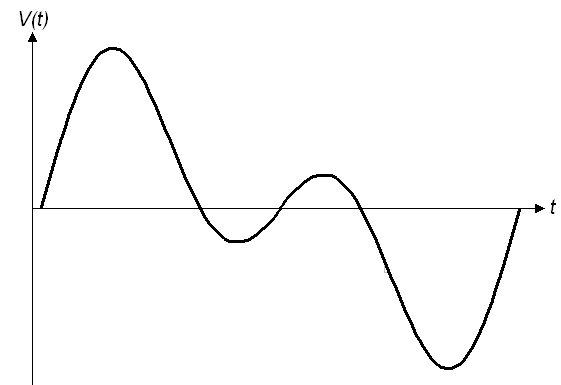

Yes, but while digital has many inherent advantages—in the end, all boils down to the simple task of telling zeroes from ones—digital is only part of the equation. Somewhere in the recording process, the analog voltage received from the microphones...

An example of analog voltage: a smooth curve that indicates voltage at every point in time for the duration of the example (smooth to the degree that I can draw it with individual pixels)

...has to be converted to digital, to be stored on the hard disk—the modern reel-to-reel tape. In the playback process, digital has to be converted back to analog voltage to be sent to the speakers.

In theory, the Nyquist-Shannon sampling theorem[11] states that this round trip can be done exactly if the highest frequency involved is less than half the sampling frequency. The keyword here is in theory, because in practice, this requirement is not met for standard CDs, and other requirements of the theorem can at best be approximated. To the degree that I understand the overall process, I'll try to illustrate the practical consequences thereof.
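The half-the-sampling-frequency condition can be made concrete with a small sketch. It uses the textbook folding rule for a pure tone; the function name `alias_frequency` is made up for this illustration and is not taken from any audio library.

```python
def alias_frequency(tone_hz, sampling_hz=44100.0):
    """Frequency at which a pure tone shows up after sampling at sampling_hz.

    Tones below half the sampling frequency come back unchanged; anything
    above folds back into the range 0 .. sampling_hz / 2.
    """
    folded = tone_hz % sampling_hz
    return min(folded, sampling_hz - folded)

# A 10 kHz tone survives as is; a 25 kHz tone would masquerade as 19.1 kHz.
print(alias_frequency(10000.0))
print(alias_frequency(25000.0))
```

This is why everything above half the sampling frequency has to be removed before the voltage is sampled: once folded back, the alias cannot be told apart from a genuine tone at that frequency.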

First, for the theory to be applicable, any frequencies above 20 kHz have to be filtered out, and rather brutally so[12]. Now, losing these frequencies doesn’t unduly worry me, since I can’t hear them anyway. But the sharp or steep filter necessary to eliminate these frequencies (also referred to as a brick-wall anti-aliasing filter) is part of the practical problem, because it alters the timing of some frequencies relative to others. The timing gets smeared even before the recording is reduced to zeroes and ones[13].
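How brutal the filter has to be follows from simple arithmetic, sketched below. The figures are the ones given in the footnotes (pass everything up to 20 kHz, remove everything from 22.05 kHz, where “everything” means about 90 dB); the function name is an invention for this example.

```python
import math

def required_slope_db_per_octave(pass_hz, stop_hz, attenuation_db):
    """Average filter slope needed to reach attenuation_db between two frequencies."""
    octaves = math.log2(stop_hz / pass_hz)
    return attenuation_db / octaves

# Theoretical dynamic range of signed 16 bit numbers (15 magnitude bits).
dynamic_range_db = 20 * math.log10(2 ** 15 - 1)

slope = required_slope_db_per_octave(20000.0, 22050.0, dynamic_range_db)
print(f"{dynamic_range_db:.2f} dB over {math.log2(22050 / 20000):.3f} octaves "
      f"-> {slope:.0f} dB/oct")
```

The transition band is only about a seventh of an octave wide, which is what pushes the required slope past 640 dB/oct.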

Next, the time-smeared voltage from the microphones has to be measured at a regular pace, 44100 times per second to be exact. This requires some kind of ultra precise timing mechanism, like a clock, and a measuring instrument that reads the voltage at every tick of the clock.

An example of converting analog voltage to digital: The voltage is measured at regular intervals in time and each measurement is converted to a number.

Clocks are probably the lesser problem, as quartz watches are cheap and precise these days. An atomic clock would be as good as it gets, but I am not aware of such a fancy PCM recorder. Digital volt meters have come a long way since I bought one some 25 years ago, although measuring is never exact: This is one of the basics they teach you in physics, and I certainly remember this to this day. Let's just assume that PCM recorders can measure well enough for practical purposes.

Finally, the measured voltages taken at quick paces have to be converted to numbers so they can be registered as zeroes and ones. For standard CDs, combinations of 16 zeroes and ones (binary digits, or bits) are used to represent numbers. This allows for numbers in a range of roughly −32000 to +32000. Loud parts of music translate to numbers that vary between large negative and large positive numbers, while soft parts of music produce smaller variations. The following table gives a rough idea how the numbers relate to bits, loudness (in decibels, dB), and—very loosely—to indications of musical dynamics (I'm aware that e.g. the fortissimo of a string trio does not translate to the same level of loudness as the ff of a full fledged symphony orchestra):

| Musical dynamics | Loudness | Number of bits needed | Range of numbers represented |
|---|---|---|---|
| fff | 110 dB | 16 bit | −32000 to +32000 |
| ff | 100 dB | | −10000 to +10000 |
| f | 90 dB | | −3200 to +3200 |
| mf | 80 dB | 11 bit | −1000 to +1000 |
| mp | 70 dB | | −320 to +320 |
| p | 60 dB | | −100 to +100 |
| pp | 50 dB | 6 bit | −32 to +32 |
| ppp | 40 dB | | −10 to +10 |
| | 30 dB | | −3 to +3 |
| | 20 dB | 1 bit | − or + |

Comparing loudness with bits and numbers on a CD
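The numbers in the table can be reproduced approximately with a few lines of arithmetic. This is a sketch under the table's own assumption that full scale (16 bits) corresponds to 110 dB; the function names are made up for illustration, and the results are rounder in the table than in the calculation.

```python
import math

FULL_SCALE = 32767       # largest positive value of a signed 16 bit number
FULL_SCALE_DB = 110      # loudness the table assigns to full scale (fff)
DB_PER_BIT = 20 * math.log10(2)   # about 6.02 dB per doubling of the voltage

def peak_value(loudness_db):
    """Largest number reached by music peaking at the given loudness."""
    return FULL_SCALE * 10 ** ((loudness_db - FULL_SCALE_DB) / 20)

def bits_needed(loudness_db):
    """Bits in use at that loudness, losing one bit for every 6.02 dB below full scale."""
    return round(16 - (FULL_SCALE_DB - loudness_db) / DB_PER_BIT)

for db in range(110, 10, -10):
    print(f"{db:3d} dB  {bits_needed(db):2d} bit  +/- {peak_value(db):8.1f}")
```

Every 10 dB step divides the range of numbers by about 3.2, and every 30 dB step costs about five bits.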

The problem with the number conversion is that there are not always enough numbers: The numbers are integers without fractional parts; hence each measured voltage has to be converted to the nearest integer number, which introduces a rounding error (quantization error). Now, if 9999.5 gets rounded down to 9999 or up to 10000, that's hardly a reason for much concern. It's fortissimo either way. However, if 9.5 gets rounded down to 9 or up to 10, because there are no numbers between 9 and 10, this may lose detail in soft passages. It is as if the softer it gets, the fewer bits are used for recording. In turn, the fewer the bits, the more a single bit becomes relevant, especially if it is the result of a rounding error.
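The two rounding examples can be compared directly. The sketch below expresses the worst-case rounding error (half a step) relative to the size of the sample itself; it only illustrates the arithmetic of the paragraph above and is not a model of any particular recorder.

```python
import math

def worst_case_error_db(sample_size):
    """Half-a-step rounding error relative to a sample of the given size, in dB."""
    return 20 * math.log10(0.5 / sample_size)

loud = worst_case_error_db(10000)   # fortissimo region
soft = worst_case_error_db(10)      # ppp region
print(f"loud passage: {loud:.1f} dB, soft passage: {soft:.1f} dB")
```

The error itself is the same half step in both cases, but relative to the music it sits about 86 dB down in the loud passage and only about 26 dB down in the soft one.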

It would be difficult, if not impossible, to demonstrate this with sound samples over the internet, since I don't know anything about the sound card in your computer, nor about your computer speakers. Hence I'll try an analogy with photography. Following are two crops of a photo of Mount Rainier at Sunrise. The top one is a straight crop from the postcard sized image, while the bottom one is a crop from the corresponding thumbnail sized image, which I then enlarged to match the postcard sized image:

An example of loss of detail: The same image represented with sufficient (top) and insufficient (bottom) bits for displaying at this size.

The thumbnail was represented with fewer bits or pixels than the postcard. After enlarging it—like turning up the volume on your stereo—the loss of detail is obvious. Now, instead of viewing your computer screen from up close, back off to about 5 times that distance[14]. Both images are now reduced to the size of the thumbnail—like turning down the volume. Do you still notice much of an obvious difference?

Granted, this is not an exact analogy[15], but there is a good deal of similarity with sound. When the symphony orchestra playing on your stereo at realistic volume levels has your undivided attention, you may hear oddities with soft violins or flutes recorded on standard CDs. When you're being sprinkled with Muzak in an air-conditioned elevator, you may instead find it hard to tell violins from flutes in the first place.

Let's turn back to the Nyquist-Shannon return trip. The music is now converted to zeroes and ones, with all the aforementioned potential for loss and damage. At this point, the playback device has the formidable challenge to convert the numbers back to voltage. This challenge is so formidable because the theory again comes with some requirements that cannot be met in practice[16], and because some of the damage simply cannot be repaired at this point. It can at best be mitigated.

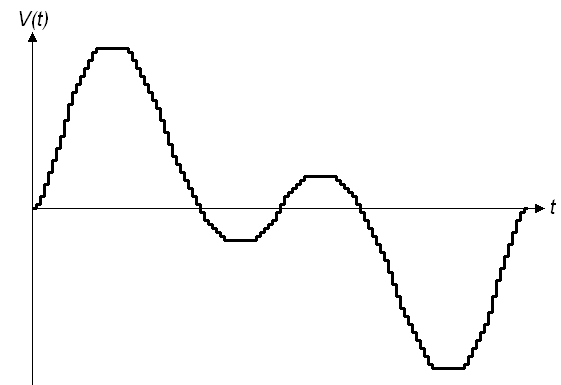

The way I understand this, the earliest CD players essentially reversed the above process. For every number on file they would switch to the respective voltage. The resulting curve was anything but smooth:

An example of converting digital to analog voltage: At regular intervals, for each number on file a switch is thrown to the corresponding voltage

What did it sound like? At the time, it sounded eerily clean to me, certainly compared to my turntable or reel-to-reel tape: No clicks and pops, and no tape hiss. When it was quiet, it was really quiet, and the onset of sound was like an appearance from vacuum. It was around the time I first heard Friday Night in San Francisco on the radio[17], which I argued I wouldn't be able to listen to on a turntable, hence I had to get a CD player, along with said CD[18].

By the end of the eighties, having just started my dissertation in computing sciences, I heard about 20 bit 8 times oversampling CD players. Highly suspicious about a potential marketing gimmick, I went to the local Radio & TV specialty store to find out. In retrospect, I almost feel sorry for the salesperson I quizzed at the time: There are 16 bits on my CD, are you making up the other 4 bits? There can't possibly be any new information in these bits!

The salesperson didn't have a sufficiently technical explanation to convince the self-confident aspiring computer scientist, but kindly gave me a demo: Gheorghe Zamfir playing pan flute with and without oversampling. The difference was uncanny; the version without oversampling sounded like someone was rustling with paper in the background. I thanked the salesperson for the demo, grabbed the sales brochure, and tried to make sense of the audible bits of nothingness.

Recall the curve resulting from the simple digital to analog voltage conversion. It is anything but the smooth original curve. But it could be made smoother by adding or removing progressively smaller building blocks like so:

An example of oversampling: digital is smoothed by adding or removing progressively smaller building blocks

The extra bits are effectively just made up, albeit in an educated way. The individual big steps are replaced by many small steps. The resulting curve looks as if measurements or samples had been taken at 4 times the original rate, and as if there had been 4 times as many numbers as originally:

An example of analog voltage after a trip to digital and back: a close but inexact copy of the original analog voltage curve

Each doubling of the number of numbers adds one bit per sample, hence two extra bits are needed in the above curve for 4 times as many numbers. Now imagine what this curve would look like with 4 extra bits for a total of 16 + 4 = 20 bits and 8 times the sampling rate, and, you guessed it, I had to upgrade.
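Here is a toy sketch of why the in-between values need extra bits. It fills in the gaps by plain linear interpolation, which is an assumption made purely for illustration; actual players use digital filters, and the function name is invented for this example.

```python
def oversample_linear(samples, factor):
    """Insert factor - 1 linearly interpolated values between neighbouring samples.

    The output is scaled by `factor` so that the in-between values, which fall
    on fractions of an original step, can still be written as whole numbers.
    """
    out = []
    for a, b in zip(samples, samples[1:]):
        for k in range(factor):
            out.append(a * (factor - k) + b * k)
    out.append(samples[-1] * factor)
    return out

# Three soft 16 bit samples, oversampled 4 times.
print(oversample_linear([9, 10, 8], 4))
# → [36, 37, 38, 39, 40, 38, 36, 34, 32]
```

Between 9 and 10 there are now steps at 9.25, 9.5 and 9.75, stored here as 37, 38 and 39. Scaling by 4 to keep whole numbers is exactly what the two extra bits pay for; scaling by 16 would take four.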

Today, I think I know what I heard behind Gheorghe Zamfir's non-oversampled pan flute. Recall for the last time the stair-stepped curve resulting from the simple digital to analog conversion. The rapid switching from voltage step to voltage step adds a whole spectrum of high frequencies to the analog result. If I understand this correctly, this spectrum extends both above and below the 44.1 kHz sampling frequency and, inconveniently so, some of it into the region below the 20 kHz threshold of audibility[19].

This requires once again a steep filter which, much to my confusion, is also referred to as anti-aliasing filter. I suspect in the early days these filters weren't steep enough, which in turn had me hear some aliasing noise left over. Oversampling alleviates this problem because not only does it create a seemingly smoother stair-stepping curve, it also pretends to have a higher sampling frequency. At 8 times the 44.1 kHz rate, most of the aliasing noise is moved to the neighborhood of 352.8 kHz[20].
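Where the unwanted frequencies end up can be sketched with the textbook rule that a stair-stepped output carries copies of each tone on either side of every multiple of the output rate. This is an idealized illustration that assumes the oversampling step itself is perfect; the function name is hypothetical.

```python
def image_frequencies(tone_hz, rate_hz, multiples=1):
    """Copies of a tone that appear around multiples of the output rate."""
    images = []
    for k in range(1, multiples + 1):
        images.append(k * rate_hz - tone_hz)
        images.append(k * rate_hz + tone_hz)
    return images

tone = 20000  # highest tone of interest, in Hz
print(image_frequencies(tone, 44100))       # without oversampling
print(image_frequencies(tone, 8 * 44100))   # with 8 times oversampling
```

Without oversampling, the lowest copy of a 20 kHz tone sits at 24.1 kHz, a fraction of an octave above the tone itself, so the filter has to be steep. At 8 times the rate the copies cluster around 352.8 kHz and a much gentler filter will do.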

The development of CD players hasn't stopped with oversampling. It was found eventually that making up all these extra oversamples had to be done at a rate that was in perfect synchronization with the original sampling rate. Deviations from this lock-step meant some kind of time-smearing not unlike wow and flutter of a turntable (i.e. a turntable whose rate of rotation varies or oscillates over time, causing audible changes in pitch or artificial vibrato).

Luckily, microchips and the development of software have made quite some progress since the late eighties, as well. For the last couple of years, CD players have replaced oversampling by upsampling. Simply put, there is now enough computer processing power available in specialized chips that can take the 16 bit numbers sampled at 44.1 kHz and produce many more 24 bit numbers out of them at a rate of 192 kHz (or higher).
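One way to see how this differs from 8 times oversampling is to look at the ratio of the two rates. The sketch below only does the arithmetic; it says nothing about how any particular chip performs the conversion.

```python
from fractions import Fraction

cd_rate = 44100

oversampling_ratio = Fraction(8 * cd_rate, cd_rate)   # 352.8 kHz output
upsampling_ratio = Fraction(192000, cd_rate)          # 192 kHz output

print(oversampling_ratio)        # 8
print(upsampling_ratio)          # 640/147
print(float(upsampling_ratio))   # roughly 4.35
```

With oversampling, every original sample lines up with every eighth output sample. At 192 kHz the rates only line up once every 147 input samples (640 output samples), so nearly all output values have to be computed rather than copied.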

I imagine this method requires a separate clock to time the output stream of bits quasi-independently of the timing of the input stream, as this would eliminate the synchronization problem of the oversampling method. The resulting asynchronicity, along with any look-ahead the software algorithm may require, can be addressed with a sufficiently large buffer of memory between the input and output streams[21].

I assume the upsampling algorithms used in the software are proprietary[22]. On the other hand, I would find it hard to believe that every manufacturer of CD players designs and implements their own software algorithms. This would be way too expensive in this day and age of optimizing profits. Accordingly, I wouldn't be surprised to learn that different CD players use the same chips. Alas, which one of these chips you'll get may be veiled by the technobabble of the marketing.

Now, on regular CDs, there are still only 16 bits per sample and 44100 samples per second, hence even the smartest software and the fastest chips can at best minimize the damage that has already been done (damage control). Does the amount of damage control warrant a price tag of $34k? Probably not; not for me, anyway—out of my price range. To be sure, upsampling is for real, but you should be able to find a CD player with 24 bit/192 kHz upsampling for much less than $34k. How much you're willing to spend to eke out the most of your existing CD collection, you'll have to decide that on your own.

Today, of course, instead of expensive damage control applied to an insufficient number of time-smeared bits, it is just as easy to produce more bits and at a higher sampling rate to begin with, hence the high-resolution formats like SACD and DVD-A. This doesn't eliminate quantization errors, and, strictly speaking, there's still a need for an anti-aliasing filter, but the kind of damage they introduce at their bit and sampling rates is going to become the least of your worries. It has simply become so easy and affordable to store that many bits![23]

REMEMBER: Digital is neither at fault merely because it's digital, nor a solution merely because it's digital.

The number of zeroes and ones makes or breaks a realistic experience of a symphony orchestra.

To keep up with the symphony orchestra, the combination of amplifier and speakers should be able to reach undistorted[24] peaks of 110 dB SPL at your favorite listening position[25]. This cannot be done with a 10 Watt tube amplifier, no matter how lovely the tubes glow in the dark, particularly when exposed on top of a shiny brass enclosure. If this kind of audio jewelry gives someone pride of ownership, so be it; it won’t give a realistic experience of even a single grand piano[26]. This is plain arithmetic, and explains why I am highly suspicious of $6k+ flea-sized tube amps[27] or $6k+ speakers with “Hi-Fi” efficiencies around 87 dB/W/m and one or two 6½ inch woofers as midbass[28], and which is why I do part of the wheat from the chaff separation strictly by numbers.
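The “plain arithmetic” can be sketched as follows. The assumptions are mine and deliberately simple: a single speaker, sound level falling by 6 dB per doubling of distance, and no help from the room; the function names are invented for this example.

```python
import math

def peak_spl(sensitivity_db, power_w, distance_m=1.0):
    """Sound pressure level for a given amplifier power and listening distance."""
    return sensitivity_db + 10 * math.log10(power_w) - 20 * math.log10(distance_m)

def power_needed(target_spl_db, sensitivity_db, distance_m=1.0):
    """Amplifier power required to reach target_spl_db at distance_m."""
    gain_db = target_spl_db - sensitivity_db + 20 * math.log10(distance_m)
    return 10 ** (gain_db / 10)

print(f"10 W into 87 dB/W/m at 1 m: {peak_spl(87, 10):.0f} dB SPL")
print(f"Power for 110 dB SPL at 1 m: {power_needed(110, 87):.0f} W")
print(f"Power for 110 dB SPL at 3 m: {power_needed(110, 87, 3.0):.0f} W")
```

Under these assumptions a 10 Watt amplifier tops out at 97 dB SPL at one meter, and reaching 110 dB SPL takes about 200 Watts at one meter and far more at a realistic listening distance.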

I won’t claim that the above very basic set of numbers is comprehensive. Maybe I’ll compile a “shopping list” at some point or other. But even if I did, it would be difficult to verify if a particular device meets the criteria on my shopping list. Not all manufacturers are brutally honest with their specifications, nor are their specifications always complete. Two typical criteria to mislead the consumer are:

- Frequency response of speakers

- Efficiency of speakers

A speaker may claim response down to 30 Hz but without indicating to what extent the response is reduced at that point. For instance, if at the lower limit the speaker is 3 dB down, this is a fairly stringent criterion, but it nevertheless corresponds to reducing the acoustic power to one half. Twice the amplifier power would be needed to make up for the loss at that point. Another criterion is the −10 dB point, reducing acoustic output to 1/10, and requiring 10 times the amplifier power. If the satin brochure doesn't say anything else, ask how the frequency response is determined.
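The relation between decibels and power used here is a one-liner, shown below as a small sketch with made-up function names.

```python
def power_ratio(db):
    """Power ratio that corresponds to a level change in decibels."""
    return 10 ** (db / 10)

def extra_amplifier_power(db_down):
    """Factor by which amplifier power must rise to make up for a drop of db_down."""
    return 1 / power_ratio(-db_down)

print(f"-3 dB  -> {power_ratio(-3):.2f} of the power, "
      f"needs {extra_amplifier_power(3):.1f}x the amplifier power")
print(f"-10 dB -> {power_ratio(-10):.2f} of the power, "
      f"needs {extra_amplifier_power(10):.1f}x the amplifier power")
```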

Likewise, a speaker may claim 87 dB/W/m efficiency, which states that at 1 Watt input and measured at a distance of 1 meter, the output is 87 dB SPL. This represents a typical value for Hi-Fi speakers, but it may or may not be the full truth. Often, 2.83 Volt and 1 Watt are used interchangeably, and while 2.83 Volt driven into a load of 8 Ohms corresponds to 1 Watt, the speaker may not be 8 Ohms. Many popular speakers are actually 4 Ohms. This is nothing bad per se, but it represents a different load for the 2.83 Volt: It actually draws twice the ampères, and hence corresponds to a power of 2 Watts. The difference is 3 dB and therefore the speaker should be specified as 84 dB/W/m. In turn, you will want to look at the power that your amplifier can output into 4 Ohms, which (for reasons of amplifier design I’m not all that familiar with) typically is not twice the power your amplifier can output into 8 Ohms.
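The 2.83 Volt versus 1 Watt confusion can be checked with a short sketch. It treats the speaker as a plain resistor at its nominal impedance, which is a simplification, and the function names are made up for illustration.

```python
import math

def power_from_voltage(volts, ohms):
    """Power drawn by a resistive load at the given voltage."""
    return volts ** 2 / ohms

def sensitivity_per_watt(spec_db_at_2v83, impedance_ohms):
    """Convert a figure measured at 2.83 V into an honest dB/W/m figure."""
    watts = power_from_voltage(2.83, impedance_ohms)
    return spec_db_at_2v83 - 10 * math.log10(watts)

print(f"2.83 V into 8 ohms: {power_from_voltage(2.83, 8):.2f} W")
print(f"2.83 V into 4 ohms: {power_from_voltage(2.83, 4):.2f} W")
print(f"87 dB at 2.83 V, 4 ohm speaker: {sensitivity_per_watt(87, 4):.1f} dB/W/m")
```

For an 8 Ohm speaker the two ways of stating the figure agree; for a 4 Ohm speaker the per-Watt figure comes out 3 dB lower.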

About that wheat from the chaff, remember? I don’t quite trust every store demo, I don’t exactly know what’s inside the devices, and I don’t take all the numbers or manufacturers’ specifications at face value. Given the large numbers involved, even the wildest enthusiast in me can’t possibly listen to all components on the planet and take double-blind AB tests with any (!) combination thereof. The ears get tired long before that. I do subscribe to Stereophile, and while I find their component measurements laudable, even Stereophile has to make money to pay their staff. I’m reasonably sure that my yearly $12.97 subscription amount covers only part of their cost of sending me 12 issues. The rest is covered by ads—you get the drift.

Measurements aside, what about the lengthy component reviews in Stereophile? Well, how do you describe with words what your ears have heard? Surprisingly often, component reviews read like reviews of exclusive or not so exclusive wines, but in either case the words can’t do justice to the facts. Instead, being hard to describe, and even harder to understand, they may raise an eyebrow or two. Besides, someone may be able to easily taste the difference between two Merlots of adjacent vineyards, while someone else may not even know that Merlots tend to be red wines. If you are so inclined, you could take a course in wine tasting, and with a bit of practice at least tell the Cabernets from the Merlots.

It’s not much different with speakers, given the compromises and physical constraints their designs may have to address. Few people I’ve talked to even begin to understand my obsession with speaker design and building until they have heard my modest attempts at sonic bliss for themselves. Once you get into it, you begin to appreciate what you have missed before, and eventually you begin to suspect what you may still be missing. It’s a vicious circle, albeit an exciting journey!

Like a composition by Beethoven that doesn’t seem to end, I seem to have a hard time getting to grips with separating the wheat from the chaff. It’s because there is no silver bullet. I merely try to absorb as much theory as I can understand to become “street savvy” enough to question the marketing and to not fall into too many “traps.” Particularly, I strive to learn what to listen for, and to understand what I just listened to. This is the most realistic explanation I can come up with for what are sometimes referred to as “Golden Ears.” It’s not that I think my ears are any more perfect than anybody else’s. The difference is that through these learning experiences I may some day be able to tell what’s wrong with a speaker in much the same way someone can readily identify the sound of period instruments or the way B. B. King plays a single note on his guitar named “Lucille.”

With all the science and numbers, how do I listen to music? In an oversized BarcaLounger, with dimmed lights, and often with my eyes closed. At this point, nothing gets in the way of the enjoyment. It’s like enjoying a filet mignon in a gourmet restaurant, with a Merlot having firm tannins, tangy cherries and tart berries—but without smoking area and without ill-behaved kids ransacking the place...

Bon appétit!

Footnotes

- Failure rates exceeding 50% weren't uncommon; see Lynn Olson's tribute to Bob Sickler.

- As far as I gather, Matti Otala first solved the problem in 1973. His design was implemented shortly afterwards by Electrocompaniet.

- Lynn Olson describes how Bob Sickler designed “the first US-made high-speed, low-TIM amplifier [...] collected rave reviews.”

- Matti Otala's wisdom soon found its way into the first high-power amplifier by Studer Revox of reel-to-reel fame.

- For a more detailed account of subjectivism in high-end audio, see Science and Subjectivism in Audio by Douglas Self.

- American Wire Gauge, a system to relate wire cross-sections to numbers. It may make sense to those who designed this system...

- Roger Russell of McIntosh fame has devoted a lengthy but entertaining article to speaker wires, notably with this anecdote on The Truth about Speaker Wire.

- About green pens and more, see the compilation by Peter Aczel called The Ten Biggest Lies in Audio.

- The field of my PhD degree; if you're ever bored, feel free to read my dissertation.

- Red Book CD is the jargon for conventional CDs, apparently named after the red color of the binders containing said standard, and PCM denotes its digital representation in general.

- If there were a system of beliefs in digital signal processing, the zeroth commandment would be the Nyquist-Shannon Theorem.

- Up to 20 kHz, we want the filter to do nothing, while from 22.05 kHz and above, we want the filter to remove everything. In practice, “everything” means about 90 dB down, corresponding to the theoretical dynamic range of [signed] 16 bit numbers: With one bit used for the sign, each of the remaining 15 bits accounts for a doubling of the voltage sampled, or a ratio of about 6 dB [20·log10(2) = 6.02 dB, or 20·log10(2^15 − 1) = 90.31 dB]. The interval from 20 kHz to 22.05 kHz spans less than one full note, such as from C to D. The required filter slope therefore exceeds 640 dB/oct (!).

- Doug Rife, of MLSSA fame, writes in his article Theory of Upsampled Audio that “the digital audio data residing on a CD is already irreparably time smeared.” To get some degree of intuition for “time-smearing,” have a look at the group delay graphs vs. filter slope in this sequence of crossover simulations and compare the last filter of the series (96 dB/oct) with the brick-wall filter (640 dB/oct).

- A normal distance from your computer screen is between 30 and 60 cm (1 to 2 ft). The shorter distance may be more comfortable for laptops, the longer for desktops, especially with 20 inch screens and larger. Hence for the experiment, back off to about 1.5 to 3 m (5 to 10 ft).

- If you are photographically inclined, revisit Mount Rainier at Sunrise and have a close look at the shadows. Some of these shadows don't have any appreciable amount of detail at all, even though I used a graduated neutral density filter (3 stops, if memory serves) to reduce contrast between the highlights and the shadows. The JPEG format used to show images on the internet does not have enough bits per pixel to represent more levels of brightness. At the time of the shot, I wanted to preview the effect of the filter before committing to slide film, which has limitations in dynamic range similar to those of 8 bit/color JPEGs. Had I shot in RAW format instead, the shot might have recorded more levels of brightness initially. Subsequently, in order to show the raw image on the internet, I would have had to convert it, at which point I would have faced an artistic decision: Do I compress the levels of brightness even more than I already did with the filter, or do I lose some detail in the shadows but maintain the overall drama? Recording engineers may face a similar dilemma when producing the mixdown for CD tracks, and in the process may (or may not) consult with the artists (composer, conductor, soloists).

- Essentially the convolution of a Dirac pulse train of infinite length with appropriately scaled and centered (infinite) sinc functions sin(π·x)/(π·x), which boils down to an integral that starts at t = −∞ and extends to t = +∞.

- Actually, my trusty Revox A76 FM Tuner. It has needed a few replacement bulbs over the years, but continues to work just fine.

- Al Di Meola, John McLaughlin, Paco de Lucía, Friday Night in San Francisco, since re-issued as a single-layer SACD.

- Fourier transform of a train of boxcar functions yields shifted sinc [sin(f)/f] spectra.

- Some hair splitting may be in order here: Oversampling does not sample the voltage received from the microphones at a higher rate, and hence the potential for loss and damage during the analog to digital conversion remains. Oversampling does, however, “feed” the digital to analog conversion at a much higher sampling rate, having “made up” all these progressively smaller “building blocks.”

- The sheer cost of the necessary memory chips would have rendered such a solution unfeasible for early CD players. Sampling at 192 kHz and 24 bits per sample for two audio channels (stereo) manipulates 2·192,000·24 = 9,216,000 bits or 1,152,000 bytes per second. That's more than one Megabyte every second. By comparison, my first IBM PC had a total of ¼ Megabyte main memory.

- In case there is much audible variation in the way someone can implement the respective sample rate conversion in the first place, I imagine it to be not unlike that of “rezzing up” a digital image with a “Bicubic Smoother,” “Fractal,” or other interpolation method.

- Hence I think it is an exercise in futility to compress 1000 “songs” onto your MP3 player unless, of course, you can't live without Muzak.

- For amplifiers, less than 0.1% distortion at all power levels and frequencies is acceptable; for speakers, 1% at full power is exceptional.

- Stereophile editor John Atkinson measured peaks close to 110 dB SPL; see also the Technical Background article by Musical Fidelity.

- Unless the speakers are big horn speakers, filling the entire basement (!) with low frequency horns, and have an efficiency of 110 dB/W/m. I have seen pictures of enthusiasts who took this kind of approach.

- The Feb 08 issue of Stereophile reviews a 12 W stereo amplifier at that price point. Frequency response is several dB down at both 20 Hz and 20 kHz, and distortion becomes audible already at about 100 mW. In fact, if I read John Atkinson's graphs correctly, distortion is around 10% at 12 W into 8 Ohms. With these numbers, it doesn't make it onto my shopping list, even without auditioning it.

- This includes notably my own North Seas project with material costs around $1.5k—I am learning and plan to improve on this in my next project, which I'll have to build by the numbers, simply because I won't be able to audition it until it's built...
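
For readers who like to check numbers, the arithmetic in the footnotes on the brick-wall filter and on the 192 kHz/24 bit data rate can be reproduced with a few lines of Python. This is only a minimal sketch: the function names are made up for illustration and do not come from any audio library, and the "slope" is simply the attenuation divided by the width of the transition band in octaves, which is how I read the figure quoted in the filter footnote.

```python
import math

# Each extra bit doubles the representable voltage: 20*log10(2) dB per bit.
DB_PER_BIT = 20 * math.log10(2)


def dynamic_range_db(bits: int) -> float:
    """Theoretical dynamic range of signed samples with the given word length.

    One bit holds the sign, so the largest magnitude is 2**(bits - 1) - 1.
    """
    return 20 * math.log10(2 ** (bits - 1) - 1)


def octaves(f_low_hz: float, f_high_hz: float) -> float:
    """Width of a frequency interval in octaves (one octave = factor of 2)."""
    return math.log2(f_high_hz / f_low_hz)


def required_slope_db_per_octave(attenuation_db: float,
                                 f_pass_hz: float,
                                 f_stop_hz: float) -> float:
    """Average slope needed to drop by attenuation_db between the two edges."""
    return attenuation_db / octaves(f_pass_hz, f_stop_hz)


def pcm_data_rate(sample_rate_hz: int, bits_per_sample: int,
                  channels: int) -> tuple[int, int]:
    """Raw PCM data rate as (bits per second, bytes per second)."""
    bits_per_second = sample_rate_hz * bits_per_sample * channels
    return bits_per_second, bits_per_second // 8


if __name__ == "__main__":
    dr = dynamic_range_db(16)
    width = octaves(20_000, 22_050)
    slope = required_slope_db_per_octave(dr, 20_000, 22_050)
    bits, byte_rate = pcm_data_rate(192_000, 24, 2)

    print(f"dB per bit:           {DB_PER_BIT:.2f}")
    print(f"16 bit dynamic range: {dr:.2f} dB")
    # In equal temperament, C to D is two semitones, i.e. 2/12 of an octave.
    print(f"transition band:      {width:.4f} oct (whole tone = {2 / 12:.4f} oct)")
    print(f"required slope:       {slope:.0f} dB/oct")
    print(f"192 kHz/24 bit stereo: {bits:,} bit/s = {byte_rate:,} byte/s")
```

Swapping in other values, say a 96 kHz sampling rate with a stop band at 48 kHz, shows how much gentler the filter can be once the transition band is more than an octave wide.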